Software testing doesn’t usually make headlines. But with recent major outages impacting the ability to communicate, access critical data, or conduct business, software quality is certainly in the news.

In the financial sector, a single outage can prevent customers from accessing their accounts, transferring funds, or making critical payments, making software quality a high-stakes issue. With AI dominating the headlines, it’s natural to ask: what role should AI play in software testing for financial institutions?

Test automation is already common, so it's tempting to conclude that AI is coming to replace testers. After all, some financial institutions already use GenAI to write code; it seems reasonable that the same AI could generate and execute tests, identify bugs, and fix code on the fly.

But don't start cutting testing budgets quite yet. For now, AI-powered testing is best used as a tool that handles time-consuming, repetitive, low-value tasks. That frees testers to focus on the complex, high-impact work: analyzing how new features affect the user experience, identifying and prioritizing high-risk workflows, and ensuring that the software aligns with real-world use cases.

The Role of AI in Testing

Most financial institutions still have a long way to go with test automation before they begin worrying about AI. With critical customer financial data at stake, there’s no margin for error. As a result, it’s not uncommon for these organizations to still rely on legacy testing processes built around versioned releases, manual testing, and a focus on risk aversion.

That said, even the most conservative financial institutions are recognizing that this approach no longer reflects reality: much of their customer experience now takes place on smartphones, tablets, and laptops instead of inside bank branches. They are beginning to adopt continuous testing, not just to run more tests but to run smarter, more targeted ones.

So where can AI augment your test strategy? Here are three areas where it can make a genuine difference:

Generating realistic test data: Financial institutions handle vast amounts of sensitive customer data, including transactions, account details, and personal information. Yet because that data is sensitive, they can't use it to test their code under real-world conditions. GenAI can help generate synthetic data sets that mimic real customer data without putting customers at risk.

Mapping complex workflows and microservices: Financial workflows are among the most complex in any industry; a common process like a loan application can take dozens of steps and touch multiple departments and applications. AI can trace these workflows, identify dependencies, and simulate complex user journeys to create realistic test scenarios that validate the workflow at every step.

Aiding regulatory compliance: AI can help demonstrate that a financial app works consistently and in accordance with specific regulations around the globe, generating comprehensive test reports that provide evidence of compliance with standards and regulations such as PCI DSS, GDPR, and SOC 2.
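To make the first of these areas, synthetic test data, more concrete, here is a minimal sketch. Note that it does not use GenAI: it swaps in a simple seeded random generator from Python's standard library, and the `Transaction` fields, account-ID format, currencies, and amount range are all hypothetical. It only illustrates the shape of the result: records that look like transactions but belong to no real customer.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Transaction:
    # Hypothetical schema chosen for illustration only.
    account_id: str
    amount_cents: int
    currency: str
    timestamp: datetime


def synthetic_transactions(n: int, seed: int = 42) -> list[Transaction]:
    """Generate n fake transactions; no real customer data is involved."""
    rng = random.Random(seed)  # seeded so the same data set can be regenerated
    start = datetime(2024, 1, 1)
    txns = []
    for _ in range(n):
        txns.append(
            Transaction(
                account_id=f"ACCT-{rng.randint(0, 999_999):06d}",  # fake ID format
                amount_cents=rng.randint(1, 500_000),  # $0.01 to $5,000.00
                currency=rng.choice(["USD", "EUR", "GBP"]),
                timestamp=start + timedelta(seconds=rng.randint(0, 365 * 24 * 3600)),
            )
        )
    return txns


if __name__ == "__main__":
    for t in synthetic_transactions(3):
        print(t)
```

Seeding the generator means every test run can regenerate exactly the same data set, so a failure seen once can be reproduced.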

The Intractable Problems of AI in Testing

While AI is a powerful tool, there are critical problems that no tester can ignore.

As you've probably seen in your own use of GenAI, these applications have a habit of making up answers. That's because GenAI hallucinates: it gets creative as it tries its best to make you happy and answer your question. It's also non-deterministic, which means it can give a different answer each time you ask the same question, leading to inconsistency.

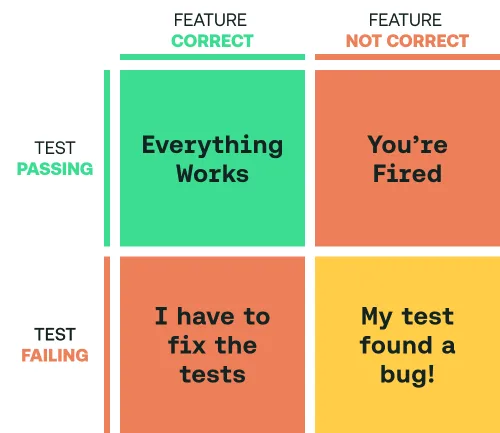

For testing, this is a time bomb: the AI may conclude that a test should pass based on statistical probability while the feature is actually broken. Failing tests aren't the real worry; they point to bugs you can fix. The danger is tests that pass but shouldn't, because they give you a false sense of confidence while letting flawed code be released into the wild.
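A test that passes but shouldn't can be surprisingly simple. The minimal sketch below assumes a hypothetical fee rule (1% of the transfer amount, capped at $25) and a deliberately buggy implementation; the names `transfer_fee_cents`, `weak_test`, and `strong_test` are illustrative and not taken from any real system.

```python
def transfer_fee_cents(amount_cents: int) -> int:
    """Hypothetical rule: 1% fee, capped at 2,500 cents ($25). Contains a bug."""
    fee = amount_cents // 100
    return fee  # BUG: the $25 cap is never applied


def weak_test() -> bool:
    # Passes for any integer result: it checks the type, not the business rule.
    return isinstance(transfer_fee_cents(1_000_000), int)


def strong_test() -> bool:
    # Expected value comes from the written rule ($25 cap), not the code's output.
    return transfer_fee_cents(1_000_000) == 2_500


if __name__ == "__main__":
    print("weak test passed:", weak_test())
    print("strong test passed:", strong_test())
```

The weak check stays green even though a $10,000 transfer is charged $100 instead of $25; only the check whose expected value is derived from the business rule exposes the bug.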

Another issue is test volume. AI can help you run thousands more tests than you could manually, but that's thousands more tests you have to manage, review, maintain, and worry about. As a result, you'll have hundreds of alerts to review at any moment, many of low importance, and they may obscure the one critical alert you actually need to act on. It's much better to use test automation to thoroughly test the roughly 10% of functionality that is mission-critical, so you can focus on keeping those tests green.

The Bottom Line

For financial institutions exploring AI in testing, there is pressure to take action now so you don’t get left behind. However, don’t ignore the cost of misusing AI in the testing process.

Some teams reject AI out of hand while others embrace it completely. Financial institutions should instead use AI strategically, where it currently offers the most value. By prioritizing security, compliance, and user experience, you can make AI part of your automated testing process to reduce risk, streamline workflows, and reinforce customer trust.