Creating mobile applications has become increasingly accessible thanks to the availability of toolkits and new programming languages. Testing them, however, is becoming more challenging. Mobile apps are growing more complex and must work across different device types, browsers, operating systems, and network bandwidths. Confirming that an application works on the latest iPhone does not guarantee it will work adequately on an Android tablet.

Test automation is only one approach to mobile testing: have the computer check a wide variety of devices repeatedly so you can release more frequently and cover more scenarios.

Mobile Testing Basics

In the simplest terms, there are two approaches to testing a mobile application. You can have humans use the application in different situations to see how it responds (manual testing), or you can have coded instructions drive the application into various scenarios and check for expected results (automated testing). Each approach is valid in different circumstances, and which one fits best can change over the course of an application's lifecycle. In practice, most organizations blend the two.

Let’s have a closer look at these two testing practices.

What is Manual Testing?

Manual testing involves human testers interacting directly with a mobile application to evaluate its functionality and usability. Testers perform the same actions real users would, such as tapping buttons, entering data, and navigating through the app. During manual testing, they examine the app's behavior across different devices, operating systems, and network conditions. They verify whether the app functions as intended, identify and report any bugs or issues they encounter, and assess the overall user experience.

Automation tools have been threatening to replace manual testing for years, and new tools come out every year with the commercial promise of eliminating it for good. However, manual testing is still the most common approach across the industry, at least during the initial application development phase.

Manual testing happens in different places. Most programmers at least try out an application, often on a real device or a simulator/emulator, before passing it on to someone else to test. Some companies have dedicated testing roles that go deeper, looking beyond the happy path or across different device models.

If the software is internal, the company may have the people who will use it perform user acceptance testing (UAT), which focuses on the question "Can I do my job with this software?" Some companies release the software early to "beta" testers, who might be employees, using a tool such as Sauce Mobile Beta Testing. Finally, companies like Applause and Testio take that "beta" version and crowdsource it, providing dozens to thousands of eyeballs to look at the software in a variety of configurations over a short period.

Regardless of who, how, or when, manual testing gives you the real feel of using the application. Manual testers can see whether the buttons are in the correct position, whether they are big enough or overlap, whether the colors look good together, and so on. Computers are not good at judging whether a picture on a screen "looks right." There are, however, some checks computers can perform quickly. For example, when you enter a valid username and password and submit, you should land on a screen that shows your name and confirms you are logged in, while entering a wrong password should produce a specific error message. Manual testing can be time-consuming and may not cover as many test scenarios as automated testing. So the challenge in mobile testing is usually not which of the two approaches to adopt, but how much of each, when, and by whom.

You can do manual testing on a real device or on a simulator/emulator, but real devices give results closer to what your users will experience.

Why Do I Need Manual Testing?

Manual testing offers valuable insights into the application's functionality, usability, and appearance. Testers take on the role of users, thoroughly exploring the application and performing typical actions to detect potential crashes. Through manual testing, testers can also provide feedback on performance, battery usage, and overheating issues, enabling early detection and resolution before the release. The feedback is often "free" just because the tester was paying attention.

How Many Devices Do I Need for Manual Testing?

The number of devices needed for mobile testing varies with several factors, including the complexity of your application, the target audience, and the level of test coverage you want to achieve. You could try to test every supported device with every supported operating system version, but the number of combinations can easily exceed the time available for proper testing. Realistically, most organizations test the newest supported OS version and the release before it, and some also test the oldest supported version. After accounting for the devices available on the market and the geographic distribution of your users, most companies end up with a test lab of 10 to 20 devices.
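To see how quickly the full matrix grows, and how much the "newest version plus one release back" policy trims it, the Python sketch below counts combinations for a hypothetical support matrix. The device models and OS versions are made-up placeholders, and the sketch simplifies by assuming every model can run every listed OS version of its platform, which real hardware often cannot.

```python
from itertools import product

# Hypothetical support matrix; replace with your own devices and OS versions.
# OS versions are listed oldest to newest.
DEVICES = {
    "Android": ["Pixel 8", "Galaxy S23", "Galaxy Tab S9", "Moto G"],
    "iOS": ["iPhone 15", "iPhone 13", "iPhone SE"],
}
OS_VERSIONS = {
    "Android": ["10", "11", "12", "13", "14"],
    "iOS": ["14", "15", "16", "17"],
}


def full_matrix(devices, os_versions):
    """Every device paired with every supported OS version of its platform."""
    return [
        (platform, device, version)
        for platform, models in devices.items()
        for device, version in product(models, os_versions[platform])
    ]


def reduced_matrix(devices, os_versions, include_oldest=False):
    """Newest supported version plus one release back, optionally the oldest too."""
    combos = []
    for platform, models in devices.items():
        versions = os_versions[platform]
        chosen = versions[-2:]  # newest and one release back
        if include_oldest and versions[0] not in chosen:
            chosen = [versions[0]] + chosen
        combos.extend((platform, d, v) for d, v in product(models, chosen))
    return combos


print(len(full_matrix(DEVICES, OS_VERSIONS)))                          # 32
print(len(reduced_matrix(DEVICES, OS_VERSIONS)))                       # 14
print(len(reduced_matrix(DEVICES, OS_VERSIONS, include_oldest=True)))  # 21
```

Because real devices only support a subset of OS releases, an actual lab's matrix is usually sparser than this one, which is part of why 10 to 20 physical devices can be enough.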

What is Automated Testing?

When people talk about automated testing, they generally mean having a tool, like Selenium or Appium, drive the application's user interface and check for expected results. The checks come from a series of commands and inspection points stored as coded instructions in a computer program. The tests are therefore pre-scripted, and each test can be a small program in its own right that clicks or types a dozen times and has another dozen verification points. Once the tests exist, and as long as the application's intended behavior has not changed, automated tests can find defects very quickly, typically within minutes of a commit to version control. Test automation suits repetitive tests that do not require human discernment and need to run periodically, and it helps you find bugs in the early stages.
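To make "commands and inspection points" concrete, here is a minimal Python sketch of the login scenario described in the manual testing section, written as two coded tests. It drives a hypothetical `FakeLoginApp` class (not a real library; it stands in for the actual app that a tool like Appium would drive) with a made-up user `alice`, then checks the expected results. Comments mark which lines are commands and which are inspection points.

```python
class FakeLoginApp:
    """Hypothetical stand-in for the app under test (not a real library)."""

    # One known account: username -> (password, display name).
    VALID_USERS = {"alice": ("s3cret", "Alice")}

    def __init__(self):
        self.fields = {"username": "", "password": ""}
        self.screen_text = "Please log in"

    def type_into(self, field, text):
        self.fields[field] = text

    def tap(self, button):
        if button != "submit":
            return
        account = self.VALID_USERS.get(self.fields["username"])
        if account is not None and account[0] == self.fields["password"]:
            self.screen_text = f"Welcome, {account[1]}! You are logged in."
        else:
            self.screen_text = "Invalid username or password"


def test_valid_login():
    app = FakeLoginApp()
    # Commands: drive the app into the scenario.
    app.type_into("username", "alice")
    app.type_into("password", "s3cret")
    app.tap("submit")
    # Inspection points: the screen shows the user's name and login state.
    assert "Alice" in app.screen_text
    assert "logged in" in app.screen_text


def test_wrong_password():
    app = FakeLoginApp()
    # Commands: same steps, but with a wrong password.
    app.type_into("username", "alice")
    app.type_into("password", "wrong")
    app.tap("submit")
    # Inspection point: the specific error text appears.
    assert app.screen_text == "Invalid username or password"


if __name__ == "__main__":
    test_valid_login()
    test_wrong_password()
    print("all checks passed")
```

The functions follow pytest's `test_` naming convention, so a runner such as pytest could collect them from a `test_*.py` file; the `__main__` block lets the file run on its own as well.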

While manual testing aims to test the user experience, automated testing aims to verify the functionality that characterizes an app. An automated test will click a button that "looks wrong" or sits in the wrong place without registering a problem, unless you thought to check for that in advance. It is better at finding functional errors, such as incorrect search results.

Why Do I Need Automated Testing?

As the application grows, so does the time needed to test it. Automated testing brings that time down, which makes frequent releases practical. That makes automated testing key to speeding up the testing process, decreasing cost, and radically reducing the time-to-feedback for significant errors from days to minutes. Automated testing allows you to:

- Test functionality that is repetitive, and therefore error-prone when performed manually, as well as test cases that have a predictable outcome.

- Easily set up and run complicated and tedious test scenarios.

- Most importantly, test on more mobile devices simultaneously, saving time. With simulators, emulators, and real devices in the cloud, you can do this without buying or managing the devices yourself.
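Running the same test on many devices at once is, at its core, a fan-out: start one run per device and collect the results. Below is a minimal Python sketch using the standard library's `ThreadPoolExecutor`. The device names are hypothetical, and `run_login_test` is a placeholder that always passes; a real version would open a session on a cloud-hosted simulator, emulator, or real device and drive the app.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical device targets; in practice each would map to a cloud session.
DEVICES = [
    "Pixel 8 / Android 14",
    "Galaxy S23 / Android 13",
    "iPhone 15 / iOS 17",
    "iPhone SE / iOS 16",
]


def run_login_test(device: str) -> tuple[str, bool]:
    """Placeholder for one automated test run on one device.

    A real version would start a remote session on `device`, drive the UI,
    and check the expected results; this one simply reports a pass.
    """
    return device, True


def run_on_all_devices(devices, test, max_workers=4):
    # Submit one run per device; with max_workers >= len(devices),
    # all runs proceed concurrently instead of one after another.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(test, devices))


results = run_on_all_devices(DEVICES, run_login_test)
for device, passed in results.items():
    print(f"{device}: {'PASS' if passed else 'FAIL'}")
```

Threads are a reasonable fit here because each real test run mostly waits on a remote device. With at least as many workers as devices, total wall-clock time approaches that of the slowest single run rather than the sum of all runs.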

How Many Test Cases Do I Need for a Basic Set of Tests?

This varies widely depending on the application and the size of a "test case." If each test case is a simple test that checks one logical operation, a typical feature might have four to ten tests, and an application might have four to ten features, for roughly 16 to 100 tests. If the application is written in two programming languages, one for iOS and one for Android, you might need to write tests using XCUITest and Espresso, respectively, which doubles the number of coded tests. An alternative is to use Appium to write a single set of coded tests, which gives you the advantage of a single code base. On the other hand, the test language might differ from the one used for the application, and the application might behave differently on each operating system.
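The estimate in this paragraph is simple multiplication, and a small Python helper makes the effect of the framework choice explicit. `coded_test_count` is a hypothetical name, and the four-to-ten ranges are the rough figures from the paragraph, not measurements.

```python
def coded_test_count(tests_per_feature: int, features: int, code_bases: int) -> int:
    """Number of coded tests to write and maintain.

    code_bases is 2 when iOS and Android tests are written separately
    (for example, XCUITest plus Espresso) and 1 for a single
    cross-platform suite (for example, Appium).
    """
    return tests_per_feature * features * code_bases


for label, code_bases in [("XCUITest + Espresso", 2), ("Appium", 1)]:
    low = coded_test_count(4, 4, code_bases)
    high = coded_test_count(10, 10, code_bases)
    print(f"{label}: {low} to {high} coded tests")
# XCUITest + Espresso: 32 to 200 coded tests
# Appium: 16 to 100 coded tests
```

The Appium figure assumes one fully shared suite. Because the application might behave differently on each operating system, some tests may still need platform-specific branches, so the real number sits somewhere between the two.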

Manual Testing vs. Automated Testing: Which to Choose?

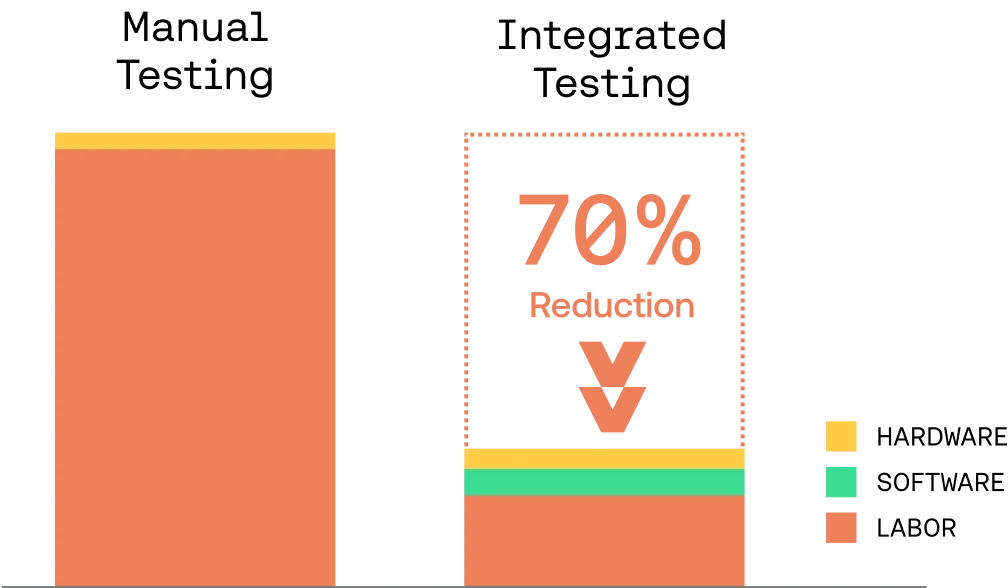

A good option is an integrated testing approach that blends manual and automated testing, which maximizes efficiency and saves time and money. Skipping automated testing results in slower feedback and a reluctance to cut and ship new versions because of the cost; skipping manual testing leaves entire categories of feedback ignored. Sauce Labs recommends 80% automated testing and 20% manual testing. Based on its research, Sauce Labs expects that combining the two approaches in that ratio can save you up to 70% of the money and time spent on testing. The right percentages for your app depend on its complexity and concept.
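One way to apply the 80/20 guideline is to triage your test inventory with the criteria this article gives for automation (repetitive checks with a predictable outcome) and see how close the resulting split lands. The Python sketch below does that for a hypothetical list of ten scenarios. The scenario names, the `Scenario` fields, and the two-flag rule are illustrative assumptions, and the 8-to-2 split here is built in by construction, not a measured result.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    repetitive: bool           # must be re-run on every build or release
    predictable_outcome: bool  # a coded check can decide pass/fail


def should_automate(s: Scenario) -> bool:
    # Rule of thumb from this article: automate repetitive checks with a
    # predictable outcome; leave "does it look right?" questions to humans.
    return s.repetitive and s.predictable_outcome


# Hypothetical test inventory for an illustrative app.
SCENARIOS = [
    Scenario("login with valid credentials", True, True),
    Scenario("login with wrong password shows error text", True, True),
    Scenario("search returns matching results", True, True),
    Scenario("search with no matches shows empty state", True, True),
    Scenario("add item to cart", True, True),
    Scenario("cart total updates after removing item", True, True),
    Scenario("profile changes are saved", True, True),
    Scenario("logout returns to login screen", True, True),
    Scenario("buttons are big enough and do not overlap", True, False),
    Scenario("colors look good together", True, False),
]


def automation_share(scenarios) -> float:
    """Fraction of scenarios that the rule above would automate."""
    return sum(should_automate(s) for s in scenarios) / len(scenarios)


print(f"{automation_share(SCENARIOS):.0%} automated")
for s in SCENARIOS:
    if not should_automate(s):
        print("manual:", s.name)
```

In a real project the split will rarely land exactly on 80/20; the point of the exercise is to make the reason behind each manual scenario explicit.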

Testing Comprehensively Through The App’s Lifecycle

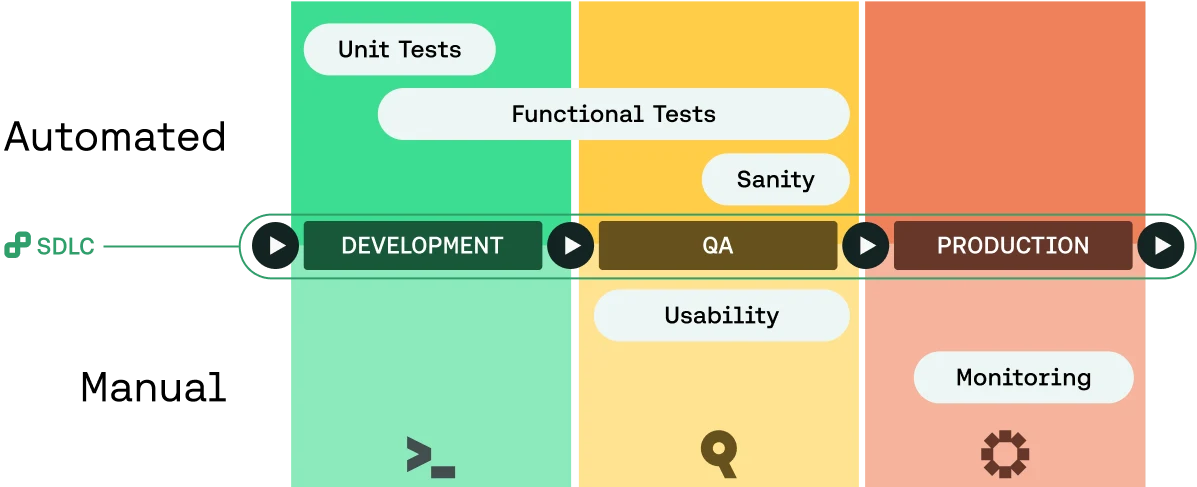

The diagram above shows which tests you should perform manually and which through test automation. It also recommends an agile approach to developing mobile applications. Note that a tester briefly explores the application while the automated tests are being written; the development and testing teams get more value from this exploration when it is done intentionally.

The best mobile app testing strategy integrates both manual testing and automated testing across the software development lifecycle. Both approaches offer many benefits that can help you deliver high-quality mobile apps at speed.