What could be better than using test automation software to validate applications quickly and efficiently? Running those tests at the same time via parallel testing! Parallel testing dramatically reduces testing time, makes more efficient use of resources, and gets your features to market faster.

That said, there are some important caveats to keep in mind to make the most of parallel testing, as well as some pitfalls that you may have to work around to avoid disrupting parallel test workflows.

Keep reading for tips on how parallel testing works, why it's beneficial, when to use it, and which best practices help to make parallel tests as effective as possible.

What is Parallel Testing?

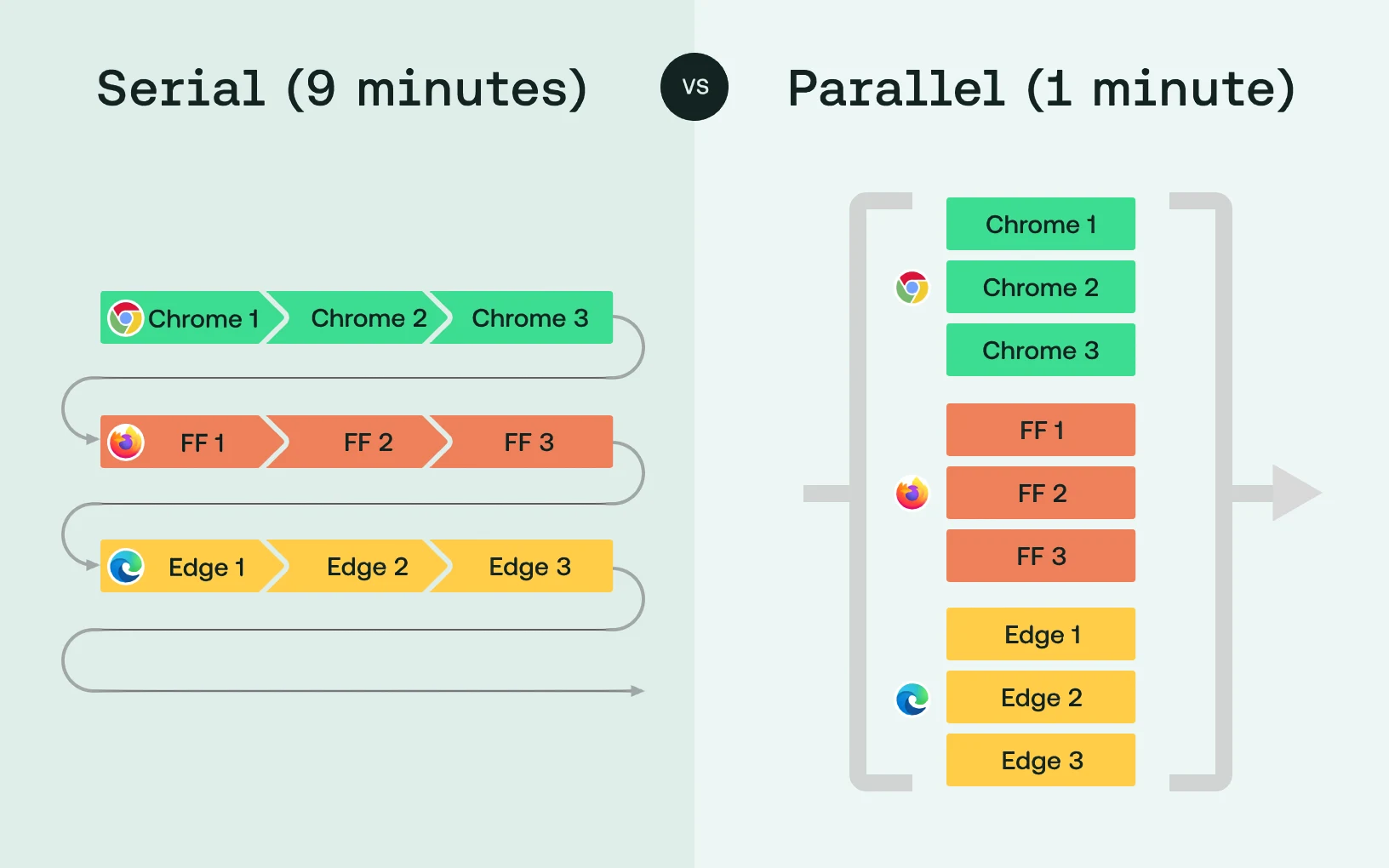

Test parallelization is the practice of executing multiple software tests simultaneously. It's the opposite of serial testing, which requires one test to finish before starting another.

Shorten Test Run Time

The most common use case for parallel testing is the execution of a suite of functional tests. Imagine you have a suite of 120 Selenium tests that take an average of 2 minutes each to complete. Running serially on one browser or mobile device, this suite would take 4 hours (120 tests x 2 minutes = 240 minutes) to run.

Now imagine running this suite in parallel, with enough compute power to run all tests at the same time.

It would take 2 minutes.

To extend the example, imagine that you needed to run this test on Chrome, Edge, Safari, Firefox, Safari-mobile AND Android-Chrome. If you had enough compute power, you could still run the entire suite in 2 minutes.

If you only had enough compute power to run on one platform at a time, it would take 12 minutes (2 minutes for each of 6 platforms). If you only had enough power to run half of one platform's tests at a time, it would take double that (24 minutes), which is still far faster than the 24 hours (6 platforms x 240 minutes) that a fully serial run would take.

No matter how you crunch the numbers, you can get far more coverage in far less time by running tests in parallel.
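The arithmetic above can be sketched in a few lines of Java. This is a simplified estimate, not a benchmark: it assumes every test takes exactly 2 minutes and ignores startup and scheduling overhead. The class and method names (`ParallelRunEstimate`, `estimateMinutes`) are made up for illustration.

```java
public class ParallelRunEstimate {

    // Estimated wall-clock minutes when `totalRuns` equal-length test runs are
    // spread over `parallelSlots` slots. Runs execute in "waves", and each
    // wave lasts as long as a single test.
    static long estimateMinutes(long totalRuns, long parallelSlots, long minutesPerTest) {
        long waves = (totalRuns + parallelSlots - 1) / parallelSlots; // ceiling division
        return waves * minutesPerTest;
    }

    public static void main(String[] args) {
        long tests = 120;
        long platforms = 6;
        long minutesPerTest = 2;
        long allRuns = tests * platforms; // 720 runs across all platforms

        // One platform, fully serial: 240 minutes
        System.out.println(estimateMinutes(tests, 1, minutesPerTest));
        // Six platforms, fully serial: 1440 minutes
        System.out.println(estimateMinutes(allRuns, 1, minutesPerTest));
        // Six platforms, one platform's worth of tests at a time: 12 minutes
        System.out.println(estimateMinutes(allRuns, tests, minutesPerTest));
        // Six platforms, everything at once: 2 minutes
        System.out.println(estimateMinutes(allRuns, allRuns, minutesPerTest));
    }
}
```

In practice tests vary in length, so the real wall-clock time of each wave is set by its slowest test rather than by the average.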

What Are the Advantages of Parallel Testing?

The benefits of parallel testing include:

Broader test coverage in the same period: By executing many tests simultaneously, you can perform more tests in the same amount of time. That means you can test across more devices, operating systems, and browser configurations to gain greater confidence that your app works as required.

Less testing time: Parallel testing can reduce the total time required to perform tests. For example, if you have ten tests that each take two minutes to complete, running them in parallel lets you complete the full test routine in two minutes. With serial testing, you'd be waiting 20 minutes for those tests to finish.

Resource efficiency: Parallel testing helps make the most efficient use possible of available testing resources. An individual test typically uses only a small share of the available CPU and memory, leaving a lot of resources idle. With parallel testing, you can run as many simultaneous tests as your infrastructure can handle. This is a particularly important advantage if you're paying for access to test infrastructure by the minute and need to optimize resource efficiency to save money.

In short, parallel testing is a great way to run more tests, in less time, with more efficient use of resources, and at a lower cost.

How Does Parallel Testing Work?

Although you could theoretically run parallel tests manually if you had multiple people performing each manual test at the same time, parallel testing usually relies on automated test frameworks to execute multiple tests automatically and simultaneously.

You can perform parallel testing with any test automation framework that supports it, in whatever language that framework supports.

To set up parallel tests, perform these steps:

Decide which tests you will run in parallel. As noted below, some tests shouldn't or can't run in parallel, so you need to determine which tests are a good fit.

Write the tests. Write code to automate each test to be run in parallel.

Schedule the tests. Some test automation frameworks provide built-in schedulers that you can use for this purpose, or you could use an external scripting tool to trigger tests based on a schedule.

Execute the tests. Let the tests run, being sure to monitor them to ensure that they all execute as required.

Examine test results. If any tests fail, determine why, and compare results from multiple tests to assess whether the issues that triggered the failure affect multiple configurations or are specific to one device, operating system, or browser.
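The execute and examine steps above can be sketched with nothing but the Java standard library. This is a minimal illustration rather than how any particular framework does it: `ParallelRunner`, `runAll`, and the test names are hypothetical, and the lambdas stand in for real browser or device tests.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ParallelRunner {

    // Submits every test to a fixed-size thread pool, waits for all of them,
    // and returns a map of test name -> passed.
    static Map<String, Boolean> runAll(Map<String, Callable<Boolean>> tests, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            Map<String, Future<Boolean>> pending = new LinkedHashMap<>();
            for (Map.Entry<String, Callable<Boolean>> e : tests.entrySet()) {
                pending.put(e.getKey(), pool.submit(e.getValue()));
            }
            Map<String, Boolean> results = new LinkedHashMap<>();
            for (Map.Entry<String, Future<Boolean>> e : pending.entrySet()) {
                boolean passed;
                try {
                    passed = e.getValue().get();
                } catch (ExecutionException ex) {
                    passed = false; // a test that throws counts as a failure
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                    passed = false;
                }
                results.put(e.getKey(), passed);
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        Map<String, Callable<Boolean>> tests = new LinkedHashMap<>();
        tests.put("chrome-login", () -> true);
        tests.put("firefox-login", () -> true);
        tests.put("safari-login", () -> { throw new IllegalStateException("element not found"); });

        // Compare results across configurations to see whether a failure is
        // specific to one browser or shared by all of them.
        runAll(tests, 3).forEach((name, passed) ->
                System.out.println(name + ": " + (passed ? "PASS" : "FAIL")));
    }
}
```

Frameworks such as TestNG and JUnit offer their own parallel execution settings, so a hand-rolled runner like this is mainly useful for understanding what those settings do.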

You probably don't need any special tools to run tests in parallel. If you're already using automated testing, turning your automated tests into parallel tests does not require extensive work. The only variable is the framework and platform you're using--some support parallelization better than others, and some platforms aren't conducive to parallel testing.

Some platforms (the iOS simulator, for example) only allow you to run a single parallel thread per computer, so running tests in parallel requires setting up multiple separate machines. This is why a cloud solution might be best: the provider sets up the environment, and all you need to do is send over the tests!

Parallel testing examples

As examples of tests that are good candidates to run in parallel, consider the following tests written for different frameworks.

Selenium parallel testing example

This Selenium test is a good fit for test parallelization because it has no data dependencies and could be executed across multiple operating systems simultaneously:

    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.firefox.FirefoxDriver;
    import org.openqa.selenium.support.PageFactory;
    import org.testng.annotations.Test;

    public class SeleniumTest {

        @Test
        public void testSelenium() {
            // Create a new instance of the Firefox driver
            WebDriver driver = new FirefoxDriver();
            try {
                // Navigate to the Google home page
                driver.get("http://www.google.com");

                // Instantiate a new Page Object and populate it with the driver
                // (GoogleHomePage is a page object class defined elsewhere in the test project)
                GoogleHomePage page = PageFactory.initElements(driver, GoogleHomePage.class);

                // Perform a search on the page
                page.searchFor("Selenium");
            } finally {
                // Always close the browser so parallel runs don't leak sessions
                driver.quit();
            }
        }
    }

Appium parallel testing example

Here's a similar test you could run in Appium across multiple device types at the same time:

    import java.net.MalformedURLException;

    import org.junit.Test;
    import org.openqa.selenium.By;
    import org.openqa.selenium.WebDriver;
    import org.openqa.selenium.WebElement;
    import org.openqa.selenium.remote.DesiredCapabilities;
    import org.openqa.selenium.remote.RemoteWebDriver;

    public class AppiumTest {

        @Test
        public void test() throws MalformedURLException {

            // Set the Desired Capabilities
            DesiredCapabilities caps = new DesiredCapabilities();
            caps.setCapability("deviceName", "My Device");
            caps.setCapability("platformName", "Android");
            caps.setCapability("platformVersion", "9.0");

            caps.setCapability("appPackage", "com.example.android.myApp");
            caps.setCapability("appActivity", "com.example.android.myApp.MainActivity");

            // Instantiate Appium Driver
            WebDriver driver

Playwright parallel testing example

And in Playwright, here's a test that could run across different operating systems:

    const { chromium } = require('playwright');

    (async () => {
      const browser = await chromium.launch({ headless: false });
      const page = await browser.newPage();
      await page.goto('https://www.google.com');

      // Type into search box.
      await page.type('#search input', 'Hello World');

      // Click search button.
      await page.click('input[type="submit"]');

      // Close the browser so the process can exit.
      await browser.close();
    })();

Challenges and Limitations of Parallel Testing

While parallel testing can speed up and optimize testing in many cases, it has its limitations. It may not work well when any of the following challenges apply:

Inter-dependent tests: If one test can't start until another test finishes, you can't run the two in parallel. This limitation applies most often when a failure in an earlier test signals that something is wrong, and you want to avoid wasting time and resources on additional tests until you fix the issue that triggered the failure.

Data dependencies: If multiple tests require access to the same data, such as an entry in a database, running the tests in parallel can be difficult or impossible because each test will be trying to fetch or alter the same data at the same time. Parallel tests should not have data dependencies.

Infrastructure limitations: If your infrastructure is capable of supporting only a limited number of tests at one time, you'll have to limit test parallelization. Attempting to run more simultaneous tests than your infrastructure can handle can result in tests that fail--probably inconsistently--due to a lack of available resources.
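One common way to respect an infrastructure limit is to cap the number of tests that can run at once. The sketch below does this with a fixed-size thread pool and records the highest concurrency it observed. The names (`BoundedParallelism`, `peakConcurrency`) are hypothetical, and the short sleep is only a stand-in for real test work.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class BoundedParallelism {

    // Runs `testCount` simulated tests on at most `maxParallel` threads and
    // returns the highest number of tests seen running at the same time.
    static int peakConcurrency(int testCount, int maxParallel) {
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(maxParallel);

        for (int i = 0; i < testCount; i++) {
            pool.execute(() -> {
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(20); // stand-in for real test work
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                }
            });
        }

        // Extra tests wait in the pool's queue instead of overloading the machine.
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }

    public static void main(String[] args) {
        // 12 tests, but never more than 3 running at once.
        System.out.println("Peak concurrency: " + peakConcurrency(12, 3));
    }
}
```

The right cap depends on what each test consumes (browser sessions, devices, memory), so it is usually found by measuring rather than guessing.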

Best Practices for Parallel Testing

To make the most of parallel testing, adhere to these key principles:

Write atomic tests: Atomic tests are tests that evaluate one specific thing rather than trying to test many things at once. Managing parallel tests – and avoiding situations where tests compete for resources – is easier when each test is atomic.

Write autonomous tests: An autonomous test is a test that can run at any time without depending on other tests.

Keep tests similar in length: To make the most of parallel testing, design each test so that it will take approximately the same amount of total time to run. Otherwise, you could end up with a testing routine where most of your tests have finished, but you are still waiting on one of them, which is inefficient.

Be strategic about which tests to run: Even with parallel testing, there are limits on how many tests you can run at once, so you'll need to be deliberate about what you test for and which configurations or environments you test on. In other words, don't treat parallel testing as a license to run infinite tests.
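As a rough illustration of the atomic and autonomous principles, the sketch below has each test create, use, and remove its own uniquely named record, so no two parallel tests ever touch the same data. Everything here is an assumption for the example: `IsolatedTestData`, `ACCOUNTS`, and `depositTest` are hypothetical, and the in-memory map stands in for a shared database.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.IntStream;

public class IsolatedTestData {

    // Stand-in for a shared database table of account balances.
    static final Map<String, Integer> ACCOUNTS = new ConcurrentHashMap<>();

    // An autonomous test: it sets up its own record, checks one behavior,
    // and cleans up after itself, without relying on any other test.
    static boolean depositTest() {
        String accountId = "test-" + UUID.randomUUID(); // unique per test run
        ACCOUNTS.put(accountId, 100);                   // set up private data
        ACCOUNTS.merge(accountId, 50, Integer::sum);    // the single behavior under test
        boolean passed = ACCOUNTS.get(accountId) == 150;
        ACCOUNTS.remove(accountId);                     // clean up
        return passed;
    }

    // Runs the test `runs` times in parallel and counts the passes.
    static long countPassing(int runs) {
        return IntStream.range(0, runs).parallel().filter(i -> depositTest()).count();
    }

    public static void main(String[] args) {
        System.out.println(countPassing(50) + " of 50 passed");
    }
}
```

If every run used the same fixed account ID instead, parallel runs could overwrite each other's balances and fail intermittently, which is the data-dependency problem described earlier.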

Run Parallel Tests on Sauce Labs

With support for all major browsers and thousands of individual devices, the Sauce DevOps Test Toolchain makes it easy to run parallel tests whenever and however you want. Take advantage of the Sauce Labs platform to access test infrastructure on demand, and use its tools for monitoring tests and troubleshooting failures across any test in your parallel test suite.