We know automation is important, so now we need to determine which tests to automate. But if automation is so valuable, shouldn’t we automate everything? As great as that sounds, the answer is a definite no. The fact is that automation takes time. It takes time to implement and it takes time to maintain, so we have to think critically about what to automate.

I believe you should prioritize automating tests that have high value for you, the team, and your organization as a whole. For example, if you’re testing an online shopping site, the checkout process may hold the highest value for your organization simply because of the revenue potential.

So, how do you identify what you do want to automate? The first step is to identify the main application flows that must always work. Ask yourself a few key questions:

How bad is it if this feature/behavior breaks?

How much value does the test have?

How big is the risk that it mitigates?

Using our example of an online shopping site, we might decide that the following application flows are the most critical:

Users can log in

Users can register their accounts

Product images display correctly

Items can be added to the shopping cart

Payment can be collected
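If it helps to make the three questions above concrete, here is a minimal sketch of one way to turn them into a rough ranking. Everything in it is an assumption for illustration: the `Flow` class, the 1 to 5 scales, the simple sum used as a score, and the example ratings (including the low-value "Footer links open" flow) are made up, not a standard formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A candidate application flow, rated 1 (low) to 5 (high) on each question."""
    name: str
    impact_if_broken: int  # How bad is it if this feature/behavior breaks?
    test_value: int        # How much value does the test have?
    risk_mitigated: int    # How big is the risk that it mitigates?

    @property
    def score(self) -> int:
        # Hypothetical scoring: a plain sum. Weight the questions however suits your team.
        return self.impact_if_broken + self.test_value + self.risk_mitigated


def prioritize(flows, limit=5):
    """Return the highest-scoring flows, best first."""
    return sorted(flows, key=lambda flow: flow.score, reverse=True)[:limit]


# Illustrative ratings only.
candidates = [
    Flow("Footer links open", 1, 1, 1),
    Flow("Users can log in", 5, 4, 4),
    Flow("Payment can be collected", 5, 5, 5),
]

top = prioritize(candidates, limit=2)
print([flow.name for flow in top])
```

The numbers matter less than the conversation they force: if the team cannot agree on how bad a broken flow would be, that is worth knowing before you automate anything.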

It’s important to know that you don’t need to write hundreds of tests to have your website tested! As in the example above, we can start with five prioritized tests, and then use analytics and user traffic data to determine which browsers, versions, and operating systems to run them on. Ultimately, running those five tests across the different browser, version, and operating system combinations gives us around 200 test executions.

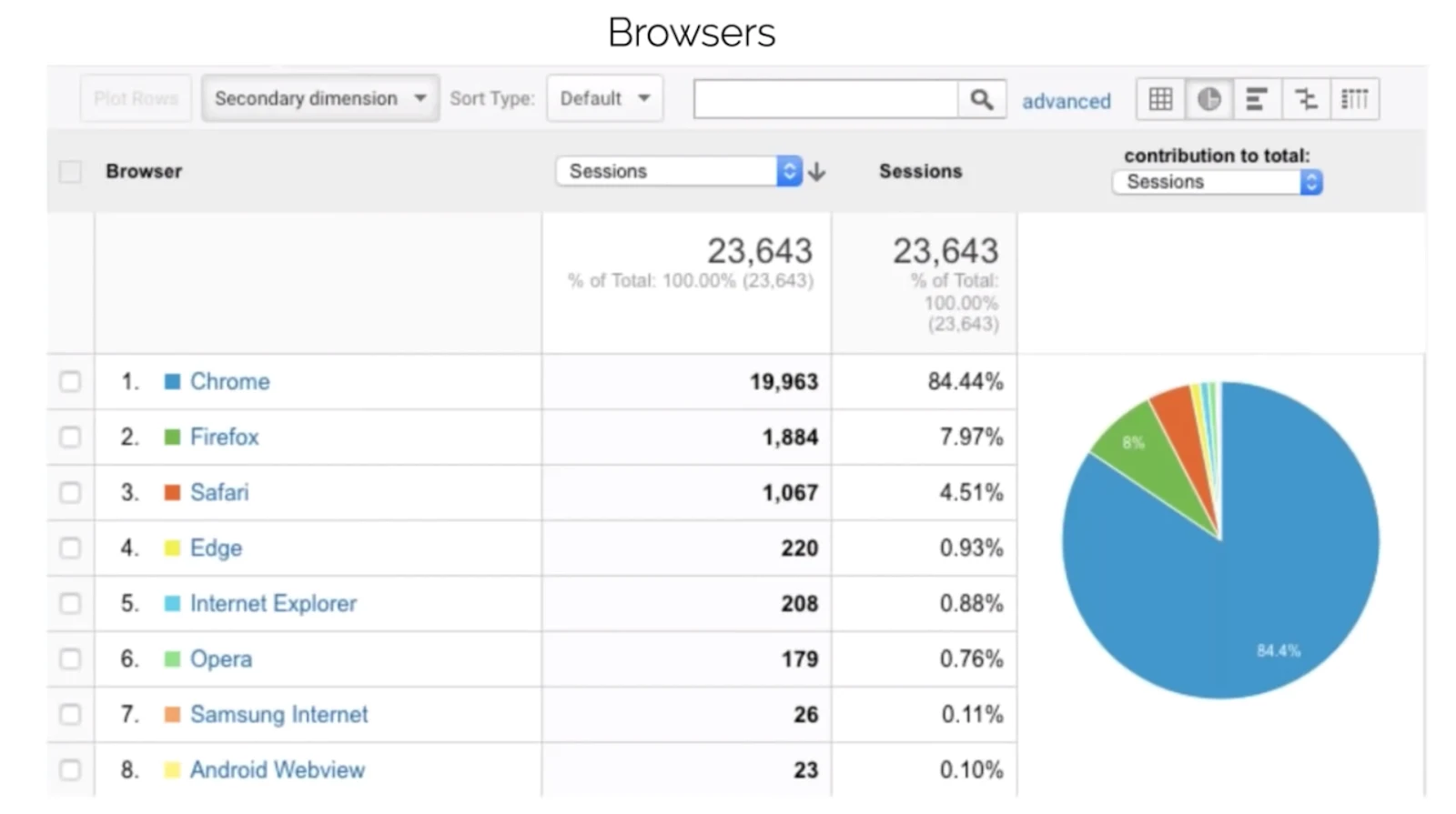

To put this into practice, you might look at the data and determine that the majority of your site users are using Chrome, Firefox and Safari.

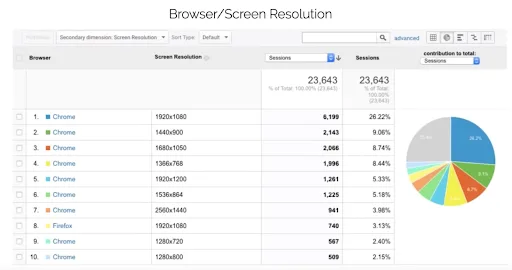

Then, you can look further to determine the browser versions that are used most often, and then do the same for OS and device.
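As a rough sketch of that selection step, you could sort the browsers by traffic share and keep adding them until you reach a coverage target. The function name `browsers_to_cover`, the 90% target, and the percentages below are all invented for illustration; they are not taken from the charts in this post, so substitute your own analytics export.

```python
def browsers_to_cover(traffic_share, target=90):
    """Pick the most-used browsers until their combined share reaches the target.

    traffic_share maps a browser name to its share of visits, in whole percent.
    """
    selected = []
    covered = 0
    ranked = sorted(traffic_share.items(), key=lambda item: item[1], reverse=True)
    for browser, share in ranked:
        if covered >= target:
            break
        selected.append(browser)
        covered += share
    return selected, covered


# Made-up percentages, for illustration only.
sample_share = {"Chrome": 62, "Safari": 17, "Firefox": 12, "Edge": 5, "Other": 4}

selected, covered = browsers_to_cover(sample_share, target=90)
print(selected, covered)
```

The same function works for browser versions, operating systems, or devices: pass in the share for that dimension instead.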

Based on our research thus far, we have identified the key application flows that will give us the most value. Testing these flows gives us confidence, because it lowers the risk of something going wrong. Also, by looking at the analytics, we’ve decided to test on:

Three different browsers (Chrome, Firefox, Safari)

Two different operating systems (Windows, OS X)

Three different screen resolutions

Seven different browser versions (Chrome 71-74, Firefox 64-65, Safari 12)

This gives us the assurance that we are automating the highest value tests on the platforms being used most often.
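To see where "around 200 test executions" can come from, here is a small sketch that builds the test matrix from the lists above. A few things in it are assumptions: the post only says "three different screen resolutions", so the resolution values are placeholders; the post doesn't spell out how the total was reached, so the combination logic is one plausible reading; and Safari is skipped on Windows because Safari 12 is not available there.

```python
from itertools import product

TESTS = [
    "Users can log in",
    "Users can register their accounts",
    "Product images display correctly",
    "Items can be added to the shopping cart",
    "Payment can be collected",
]

# Seven browser versions in total: Chrome 71-74, Firefox 64-65, Safari 12.
BROWSER_VERSIONS = {
    "Chrome": ["71", "72", "73", "74"],
    "Firefox": ["64", "65"],
    "Safari": ["12"],
}

OPERATING_SYSTEMS = ["Windows", "OS X"]

# Assumption: placeholder values, the post does not name the three resolutions.
RESOLUTIONS = ["1024x768", "1366x768", "1920x1080"]


def is_supported(browser, os_name):
    """Skip combinations that cannot exist, such as Safari on Windows."""
    return not (browser == "Safari" and os_name == "Windows")


def build_matrix():
    """Return one (test, browser, version, os, resolution) tuple per execution."""
    platforms = [
        (browser, version, os_name, resolution)
        for browser, versions in BROWSER_VERSIONS.items()
        for version in versions
        for os_name, resolution in product(OPERATING_SYSTEMS, RESOLUTIONS)
        if is_supported(browser, os_name)
    ]
    return [(test, *platform) for test, platform in product(TESTS, platforms)]


matrix = build_matrix()
print(len(matrix))  # 195
```

Under these assumptions we get 39 platform combinations and 195 executions from just five tests, which is in line with the "around 200" figure.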

Now that we know WHAT to automate, let’s turn to five best practices for making your automated tests successful.

Focus on reusability and maintainability. Avoid code duplication across tests and helper classes/methods.

Every test must be autonomous. Tests must be able to run in any order without depending on each other!

Write tests and code only for the current requirements. Avoid complex designs that consider potential future use cases.

Get familiar with software design patterns. They can benefit automation testing as well.

Base your work on testing plans and/or strategies, not on tools. Don’t choose a tool and then look for ways to use it. Start with your strategy and plan, and only then should you consider the tools that can help.
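The first two practices are the easiest to show in code. Below is a minimal sketch, assuming a hypothetical in-memory `ShoppingCart` stand-in rather than a real site or browser driver. The shared helper `new_cart_with` keeps setup in one place (reusability), and each test builds its own cart instead of relying on state left behind by another test (autonomy).

```python
class ShoppingCart:
    """Hypothetical in-memory stand-in for the application under test."""

    def __init__(self):
        self.items = {}

    def add(self, sku, quantity=1):
        self.items[sku] = self.items.get(sku, 0) + quantity

    def total_items(self):
        return sum(self.items.values())


def new_cart_with(*skus):
    """Shared helper: setup lives here once instead of being copied into every test."""
    cart = ShoppingCart()
    for sku in skus:
        cart.add(sku)
    return cart


def test_item_can_be_added():
    cart = new_cart_with("SKU-1")  # fresh cart, no leftovers from other tests
    assert cart.total_items() == 1


def test_adding_same_item_twice_increases_quantity():
    cart = new_cart_with("SKU-1", "SKU-1")
    assert cart.items["SKU-1"] == 2


# Because no test depends on another, any execution order passes.
for run_test in (test_adding_same_item_twice_increases_quantity, test_item_can_be_added):
    run_test()
```

The function names follow the `test_` prefix that runners such as pytest pick up, but nothing here depends on a particular tool, which fits the last practice in the list.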

If you enjoyed this post (part 4 in a series), check out the other installments of Diego’s “5 Steps to Jumpstart Test Automation” series.